Quasi-random, more or less unbiased blog about real-time photorealistic GPU rendering

Friday, January 28, 2011

Update 5 Cornell Box Pong: looking for help

New videos of the Brigade real-time path tracer!

Minecraft level with HDR skydome lighting (this time with global illumination) (24 spp):

http://www.youtube.com/watch?v=wAGEZnFVTos

Simple scene with HDR skydome lighting and refraction (24 spp):

http://www.youtube.com/watch?v=A4bkT-QTQXY

Buddha and Dragon scene, multi-bounce lighting (8 spp):

http://www.youtube.com/watch?v=3qvdO4TxU-8

Escher scene, lots of areas with indirect lighting (8 spp):

http://www.youtube.com/watch?v=tLREB7dp1F4

Same Escher scene but with significantly reduced noise (8 spp):

http://www.youtube.com/watch?v=lGnvuL8kq_Q

The last video in particular shows quite convincingly that real-time path tracing for games (at moderate framerates and resolutions) is almost ready for prime time!

Tuesday, January 25, 2011

Update 4 on Cornell Box Pong

And two YouTube videos:

http://www.youtube.com/watch?v=Ze1U8X4Awuc

http://www.youtube.com/watch?v=rCqXd6bAw0k

Download scene (needs tokaspt to run)

- refraction in the glass sphere in front

- reflection on curved surfaces

- diffuse interreflection, showing color bleeding on the background spheres and on the Pong ball

- soft shadows behind the background spheres and under the Pong ball when touching the ground

- true ambient occlusion (not a fake like SSAO or the much better quality AOV) when the balls approach the ceiling or floor

- indirect lighting (ceiling, parts of the back wall in shadow)

- anti-aliasing (multiple stochastic samples per pixel)

Now I want to focus on getting the game code ready.

Update: I've made the room higher, and all the walls, the ceiling and the floor are now convex, which should simplify the collision detection:

Sunday, January 23, 2011

Update 3 on Cornell Box Pong

Update 2 on real-time path traced Cornell Box Pong

These are the frames making up the animation in full "simulated real-time" quality (rendered for about 3 seconds on my laptop with a GeForce 8600M GT; should render in less than 100 milliseconds on a GTX 580):

Friday, January 21, 2011

Update 1 on the real-time path traced Cornell Box Pong

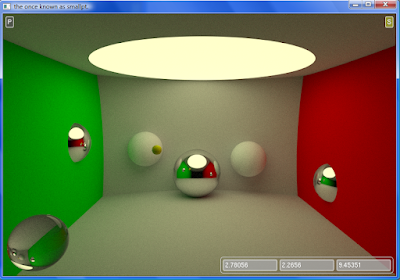

Here's a screenshot of the scene:

Everything you see in the picture is either a sphere or a part of a (very large) sphere. Ceiling, floor, side walls and back wall are in fact huge intersecting spheres, giving the impression of planes. The circular light source in the ceiling is also a part of a light emitting sphere protruding through the ceiling. The Pong bats are also parts of spheres protruding through the side walls. I included some diffuse spheres to show off the color bleeding and the obligatory reflective sphere as well.
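To get a feel for why a huge sphere can pass for a flat wall, here's a minimal Python sketch that computes how far a sphere's surface falls away from its tangent plane. The radius of 1e5 and the half-width of 1 unit are illustrative assumptions, not values taken from the scene file, and `sphere_sag` is a hypothetical helper name.

```python
import math

def sphere_sag(radius, half_width):
    """Distance between a sphere's surface and its tangent plane,
    measured at a distance half_width from the tangent point.

    Uses the numerically stable form w^2 / (R + sqrt(R^2 - w^2)),
    which equals R - sqrt(R^2 - w^2) but avoids cancellation for large R.
    """
    inner = radius * radius - half_width * half_width
    return (half_width * half_width) / (radius + math.sqrt(inner))

if __name__ == "__main__":
    # Assumed values: a "wall" sphere of radius 1e5 seen across a room
    # that extends 1 unit to either side of the tangent point.
    print(sphere_sag(1e5, 1.0))
```

With these assumed numbers the wall bulges by only a few millionths of a unit across the room, which is far below what you could see in a rendered frame.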

I ran into trouble making the Pong bats as described in my previous post, so I decided to make the bats by using just one sphere per bat instead of two. The sketch below shows how it’s done:

In order to make the path traced image converge as fast as possible (in under 100 milliseconds to allow for some playability), I made the light source in the ceiling bigger. I think you should be able to get 30 fps in this scene on a GTX 580 with 64 samples per pixel per frame at the default resolution. (If you have one of these cards or another Fermi-based card, please leave a comment with your performance statistics. Press "p" in tokaspt to see the fps counter and change the spp.)

The above Pong scene can be downloaded here: http://www.2shared.com/file/PqfY-sBV/pongscene.html Place the file in the tokaspt folder and open it from within tokaspt by pressing F9. You also need tokaspt and a CUDA-enabled GPU.

On to the gameplay, which still needs to be implemented. The gameplay mechanics are extremely simple: all the movement of the spheres happens in 2D, just like in the original Pong game: the ball is moving in a vertical plane between the Pong bats and the bats can only move up or down. Only 3 points need to be changed per frame: the centers of the 2 spheres making up the bats (only up and down) and the center of the blue ball. The blue ball bounces off the ceiling and the floor in the direction of the side walls. If the player or the computer fails to bounce the ball back with the bats and the ball hits the red sphere (red wall) or the green sphere (green wall) the game is lost for that player and another game begins. Since everything is happening in 2D this is just a matter of simple collision detection calculation between two circles. There are plenty of 2D Pong games on the net with open source code (single player and multi-player), so I only have to copy one of those and change the tokaspt source. Should be a piece of cake, except that I haven't done anything like this before :)
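The 2D mechanics described above can be sketched in a few lines of Python. This is only a rough illustration under my own assumptions (it is not tokaspt code and not taken from any existing Pong source): the ball and the bats are treated as circles in the vertical plane, and the function names, argument order and bounce rule are all hypothetical.

```python
import math

def circles_overlap(ax, ay, ar, bx, by, br):
    """True if two circles touch or overlap (compares squared distances)."""
    dx, dy = bx - ax, by - ay
    return dx * dx + dy * dy <= (ar + br) ** 2

def reflect(vx, vy, nx, ny):
    """Mirror the velocity (vx, vy) about the unit normal (nx, ny)."""
    dot = vx * nx + vy * ny
    return vx - 2.0 * dot * nx, vy - 2.0 * dot * ny

def step_ball(x, y, vx, vy, r, bats, floor_y, ceiling_y, dt):
    """Advance the ball by dt. bats is a list of (cx, cy, radius) circles."""
    x, y = x + vx * dt, y + vy * dt
    # Floor and ceiling: flip the vertical velocity when the ball touches them.
    if (y - r <= floor_y and vy < 0.0) or (y + r >= ceiling_y and vy > 0.0):
        vy = -vy
    for bx, by, br in bats:
        if circles_overlap(x, y, r, bx, by, br):
            # Normal points from the bat centre to the ball centre.
            # If the centres coincide the normal is zero and nothing happens.
            dist = math.hypot(x - bx, y - by) or 1.0
            nx, ny = (x - bx) / dist, (y - by) / dist
            if vx * nx + vy * ny < 0.0:  # only bounce when moving into the bat
                vx, vy = reflect(vx, vy, nx, ny)
    return x, y, vx, vy
```

Per frame, the game would move the two bat centres up or down, call `step_ball` once, and check whether the ball has reached the red or green wall.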

Monday, January 17, 2011

Real-time path traced Cornell Box Pong

The plan is as follows: use the Cornell box as the "playing area". The bouncing ball will be a diffuse, specular or light-emitting sphere. The rectangular boxes cannot be made out of triangles (tokaspt only supports spheres as primitives) and should instead be made out of the intersection of two overlapping spheres, creating a lens-shaped object as in the picture below (grey part):
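One way to ray trace such a lens is to intersect the ray with both spheres and keep only the stretch where it is inside both at once. The Python sketch below shows that idea. It is my own illustration, assuming equal radii and a unit-length ray direction, and it says nothing about how tokaspt handles this internally; `sphere_interval` and `lens_hit` are hypothetical names.

```python
import math

def sphere_interval(origin, direction, center, radius):
    """Return (t_enter, t_exit) along a unit-length ray, or None on a miss."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    return (-b - root, -b + root)

def lens_hit(origin, direction, center_a, center_b, radius):
    """Nearest positive hit distance on the lens formed by the overlap of
    two equal-radius spheres, or None if the ray misses the overlap."""
    a = sphere_interval(origin, direction, center_a, radius)
    b = sphere_interval(origin, direction, center_b, radius)
    if a is None or b is None:
        return None
    # The ray is inside the lens only where both intervals overlap.
    t_enter, t_exit = max(a[0], b[0]), min(a[1], b[1])
    if t_enter > t_exit or t_exit <= 0.0:
        return None
    return t_enter if t_enter > 0.0 else t_exit
```

For example, with unit spheres centred at x = -0.8 and x = 0.8, the lens spans x = -0.2 to x = 0.2 on the axis, so a ray fired along the x axis from x = -5 hits it after 4.8 units.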

The "boxes" will thus have curved surfaces off which the ball can bounce:

Potential problems:

- I have very little programming experience

- my development hardware is ancient (GeForce 8600M GT), but even with this old card I can get very fast convergence in the scenes included in tokaspt

- making the gameplay code work and, above all, fun: 2D physics with collision detection of the ball with the lens-shaped boxes (the ping pong bats), ceiling and floor, and the ball bouncing back and forth between the boxes at progressively higher speeds to steadily increase the difficulty level (I found some open-source code for a basic Pong game, so this part shouldn't be too difficult)

- all the code should be executed on the GPU

Let's see how far I can get this. Hopefully some screenshots will follow soon.

Saturday, January 15, 2011

Nvidia's Project Denver to appear first in Maxwell GPU in 2013

Nvidia is not providing much in the way of detail about Project Denver, but Andy Keane, general manager of Tesla supercomputing at Nvidia, told El Reg that Nvidia was slated to deliver its Denver cores concurrent with the Maxwell series of GPUs, which are due in 2013. As we previously reported, Nvidia's Kepler family of GPUs, implemented in 28 nanometer processes, are due this year, delivering somewhere between three and four times the gigaflops per watt of the current "Fermi" generation of GPUs. The Maxwell GPUs are expected to offer about 16 times the gigaflops per watt of the Fermi. (The Register is wrong here: the chart actually showed 16x gigaflops per watt over Tesla or GT200 cards.) (Nvidia has not said what wafer baking process will be used for the Maxwells, but everyone is guessing either 22 or 20 nanometers.)

While Keane would not say how many ARM cores would be bundled on the Maxwell GPUs, he did confirm that Nvidia would be putting a multicore chip on the GPUs and hinted that it would be considerably more than the two cores used on the Tegra 2 SoCs. "We are going to choose the number of cores that are right for the application," says Keane.

A multicore ARM CPU integrated into the GPU, nice!

Which algorithm is the best choice for real-time path tracing?

instant radiosity

- fast

- only useful for diffuse and semi-glossy scenes

- performance deteriorates quickly in glossy scenes

- many artefacts due to light bleeding through, singularity effects, clamping, ...

unidirectional path tracing (PT)

- best for exteriors (mostly direct lighting)

- not so good for interiors with much indirect lighting and small light sources

- very slow for caustics

bidirectional path tracing (BDPT)

- best for interiors (indirect lighting, small light sources)

- fast caustics

- very slow for reflected caustics

Metropolis light transport (MLT) + BDPT

- best for interiors (indirect lighting, small light sources)

- especially useful for scenes with very difficult lighting (e.g. through a keyhole, light splitting through prism)

- faster for reflected caustics

energy redistribution path tracing

- mix of Monte Carlo PT and MLT

- best for interiors (indirect lighting, small light sources)

- much faster than PT for scenes with very difficult lighting (e.g. light coming through a small opening, lighting the scene indirectly)

- fast caustics

- not so fast for glossy materials

- problems with detailed geometry

photon mapping

- best for indoor scenes

- biased, artefacts, splotchy, low frequency noise

- fast, but not progressive

- large memory footprint

- very useful for caustics + reflected caustics

stochastic progressive photon mapping

- best for indoor

- fast and progressive

- very small memory footprint

- handles all kinds of caustics robustly

BDPT with quasi-Monte Carlo (QMC) for indoor scenes and PT with QMC for outdoor scenes seem to be the best candidates for real-time path traced games. Two-way path tracing could be a very interesting alternative as well. Caustics are a nice effect for perfectly physically correct rendering, but are really not that important in most scenes and can generally be ignored for real-time purposes, where convergence speed is of the utmost importance.

I also found this comment from vlado (V-Ray developer) on the V-Ray forums regarding Metropolis light transport:

"I came to the conclusion that MLT is way overrated. It can be very useful in some special situations, but for most everyday scenarios, it performs (much) worse than a well-implemented path tracer. This is because MLT cannot take advantage of any sort of sample ordering (e.g. quasi-Monte Carlo sampling, or the Schlick sequence that we use, or N-rooks sampling etc). A MLT renderer must fall back to pure random numbers which greatly increases the noise for many simple scenes (like an open skylight scene)."
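To give an idea of what "sample ordering" means in practice, here's a small Python sketch of one well-known quasi-Monte Carlo sequence, the Halton sequence, next to plain random sampling. This is a generic textbook illustration and has nothing to do with V-Ray's actual sampler; the toy integrand (the area of a quarter disc) and all function names are my own choices.

```python
import random

def radical_inverse(index, base):
    """Mirror the base-`base` digits of index around the decimal point."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        result += (index % base) * fraction
        index //= base
        fraction /= base
    return result

def halton_2d(count):
    """First `count` points of the 2D Halton sequence (bases 2 and 3)."""
    return [(radical_inverse(i, 2), radical_inverse(i, 3))
            for i in range(1, count + 1)]

def random_2d(count, seed=0):
    """`count` pseudo-random points in the unit square, for comparison."""
    rng = random.Random(seed)
    return [(rng.random(), rng.random()) for _ in range(count)]

def quarter_disc_area(points):
    """Estimate the area of the unit quarter disc (exact value: pi / 4)."""
    inside = sum(1 for x, y in points if x * x + y * y <= 1.0)
    return inside / len(points)

if __name__ == "__main__":
    for n in (64, 256, 1024):
        print(n, quarter_disc_area(halton_2d(n)), quarter_disc_area(random_2d(n)))
```

The Halton points fill the square more evenly than random points, so the estimate tends to settle faster at the same sample count. A path tracer can exploit that kind of even coverage, while MLT, according to the comment above, cannot.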

Friday, January 14, 2011

Carmack excited about Nvidia's Project Denver, continues ray tracing research

From his Twitter account:

"I have quite a bit of confidence that Nvidia will be able to make a good ARM core. Probably fun for their engineers."

"Goal for today: parallel implementation of my TraceWorld Kd tree builder"

"10mtri model got 2.5x faster on 1 thread, 19x faster on 24 (hyper)threads."

"Amdahl’s law is biting pretty hard at the start, with only being able to fan out one additional thread per node processed."

"The speedup of a program using multiple processors in parallel computing is limited by the time needed for the sequential fraction of the program. For example, if 95% of the program can be parallelized, then the theoretical maximum speedup using parallel computing would be 20 times faster, no matter how many processors are used."
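The 95% example is easy to check. Amdahl's law gives the speedup on N workers as 1 / ((1 - p) + p / N), where p is the parallel fraction. Here's a minimal Python sketch of that formula; the 24-worker figure is just borrowed from the thread count mentioned above for illustration and is not a model of Carmack's Kd tree builder.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Theoretical speedup when `parallel_fraction` of the work scales
    perfectly across `workers` and the rest stays serial."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)

if __name__ == "__main__":
    for workers in (1, 8, 24, 1000000):
        print(workers, round(amdahl_speedup(0.95, workers), 2))
```

With p = 0.95 the speedup on 24 workers is only about 11x, and it never exceeds 20x however many workers are added, because the serial 5% alone takes 1/20 of the original run time.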

"so I’m going through a couple of stages of optimizing our internal raytracer, (TreeWorld used for precomputing the lightmaps and megatextures, not for real-time purposes) this is making things faster and the interesting thing about the processing was, what we found was, it’s still a fair estimate that the GPUs are going to be five times faster at some task than the CPUs. But now everybody has 8 core systems and we’re finding that a lot of the stuff running software on this system turned out to be faster than running the GPU version on the same system. And that winds up being because we get killed by Amdahl’s law there where you’re throwing the very latest and greatest GPU and your kernel amount (?) goes ten times faster. The scalability there is still incredibly great, but all of this other stuff that you’re dealing with of virtualizing of textures and managing all of that did not get that much faster. So we found that the 8 core systems were great and now we’re looking at 24 thread systems where you’ve got dual thread six core dual socket systems. It’s an incredible amount of computing power and that comes around another important topic where PC scalability is really back now "

Monday, January 10, 2011

Arnold render to have full GPU acceleration in a few years

Some excerpts from the interview:

"The first target for that backend is the CPU, and that’s what we’re using now in production. But the design goals of OSL include having a GPU backend, and if you were to browse on the discussion lists for OSL right now, you would see people working on GPU-accelerated renderers. So that could happen in future: that a component of the rendering could happen on the GPU, even for something like Arnold."

"it doesn’t make sense to cram the kinds of scenes we throw at Arnold every day, with tens of thousands of piece of geometry and millions of textures, at the GPU. Not today. Maybe in a few years it will."

Arnold is a unidirectional path tracer, so it is a perfect fit for GPU acceleration. "Maybe in a few years it will" could be a reference to Project Denver. When Project Denver materializes in future high-end GPUs from Nvidia, there will be a massive speed-up for production renderers like Arnold and other biased and unbiased renderers. The implications for rendering companies will be huge: all renderers will become greatly accelerated and there will no longer be a CPU rendering camp and a GPU rendering camp. Everyone will want to run their renderer on this super-Denver-chip. GPU renderers like Octane, V-Ray RT GPU and iray will have a head start on this new platform. Real-time rendering (e.g. CryEngine 4) and offline rendering (e.g. Arnold) will converge much faster since they will be using the same hardware.

AMD and Intel are not sitting still: they recently launched Fusion and Sandy Bridge, which follow basically the same philosophy as Project Denver but come from the other side. While Nvidia is adding CPU cores to the GPU, AMD and Intel are adding GPU cores to the CPU. Which approach is better remains to be seen, but I think that Nvidia will have the better performing product as usual. Eventually there will no longer be a distinction between CPUs and GPUs, since they will be merged on the same chip: a few latency-optimized cores (today's CPU cores), which process the parts of the code that are inherently serial and impossible to parallelize, and thousands of throughput-optimized cores (today's GPU cores or stream processors), which handle the parallel parts of the code, all using the same shared memory pool.

The coming years will be very exciting for offline and real-time graphics, in particular for rendering based on ray tracing. Photon mapping, for example, is a perfect candidate to become real-time in a couple of years.

Thursday, January 6, 2011

Nvidia is building its own CPU!!!

Bill Dally, chief scientist at Nvidia, already hinted that future GPUs from Nvidia will be incorporating ARM-based CPU cores on the same chip as the GPU. Now it's official (Project Denver will first appear in the Maxwell GPU, see http://raytracey.blogspot.com/2011/01/nvidias-project-denver-to-appear-first.html)! There's an interesting blog post from Bill Dally on http://blogs.nvidia.com/2011/01/project-denver-processor-to-usher-in-new-era-of-computing/. Some paragraphs which are relevant to GPU ray tracing/path tracing:

"As you may have seen, NVIDIA announced today that it is developing high-performance ARM-based CPUs designed to power future products ranging from personal computers to servers and supercomputers.

Known under the internal codename “Project Denver,” this initiative features an NVIDIA CPU running the ARM instruction set, which will be fully integrated on the same chip as the NVIDIA GPU. This initiative is extremely important for NVIDIA and the computing industry for several reasons.

NVIDIA’s project Denver will usher in a new era for computing by extending the performance range of the ARM instruction-set architecture, enabling the ARM architecture to cover a larger portion of the computing space. Coupled with an NVIDIA GPU, it will provide the heterogeneous computing platform of the future by combining a standard architecture with awesome performance and energy efficiency."

"An ARM processor coupled with an NVIDIA GPU represents the computing platform of the future. A high-performance CPU with a standard instruction set will run the serial parts of applications and provide compatibility while a highly-parallel, highly-efficient GPU will run the parallel portions of programs."

I wonder what Intel's and AMD's answer will be. High-end versions of Fusion and Sandy Bridge/LRB/Knights Ferry? Either way, it's clear that all of "the big 3" are now pursuing CPU/GPU hybrid chips. Bidirectional path tracing, Markov chain Monte Carlo rendering methods (such as Metropolis light transport and ERPT) and photon mapping will benefit enormously from these hybrid architectures: because these algorithms are partially sequential, they are an ideal match for hybrid chips (although with clever parallelization tricks they can already run fast on current GPUs, see MLT on GPU and photon mapping on GPU). Very complex procedural shaders will run much faster, and superfast acceleration structure rebuilding (which is inherently sequential but can be parallelized to a great extent) will allow real-time ray tracing of thousands and even millions (see the HLBVH paper by Pantaleoni and Luebke) of dynamic objects simultaneously. GPU and CPU will share the same memory pool, so slow PCIe transfers will no longer be needed. Project Denver is in essence exactly what Neoptica (a think tank of top graphics engineers acquired by Intel in 2007) had in mind (http://pharr.org/matt/talks/graphicshardware.pdf). The irony is that Neoptica's vision was intended for Larrabee, but now it's Nvidia that will make it real with Project Denver.

With Nvidia soon producing its own CPUs, competition will become fierce. From now on, Nvidia is no longer just a GPU company, but is targeting the same PC crowd as Intel and AMD. The concepts of "GPU" and "CPU" will slowly vanish in favor of hybrid architectures, like LRB, Fusion and future Nvidia products (Kepler/Maxwell???). And there is also Imagination Technologies, which will incorporate hardware-accelerated ray tracing in PowerVR GPUs. Exciting times ahead! :-)